This is a concise introduction to the Variational Autoencoder (VAE).

An Introduction to Bloom Filter

When a web crawler needs to detect and filter out duplicates, a Bloom filter is a good way to cut the memory cost. Here is a brief introduction.

Likelihood-based Generative Models II: Flow Models

Flow models are used to model continuous data.

Likelihood-based Generative Models I: Autoregressive Models

The brain has about 10^14 synapses and we only live for about 10^9 seconds. So we have a lot more parameters than data. This motivates the idea that we must do a lot of unsupervised learning since the perceptual input (including proprioception) is the only place we can get 10^5 dimensions of constraint per second.

(Geoffrey Hinton)

Neural Network Tricks

Techniques for neural network training. Updated continually.

BERTology: An Introduction!

This is an introduction to the recent BERT family of models.

Efficient Softmax Explained

Softmax incurs a high computational cost when the output vocabulary is very large.

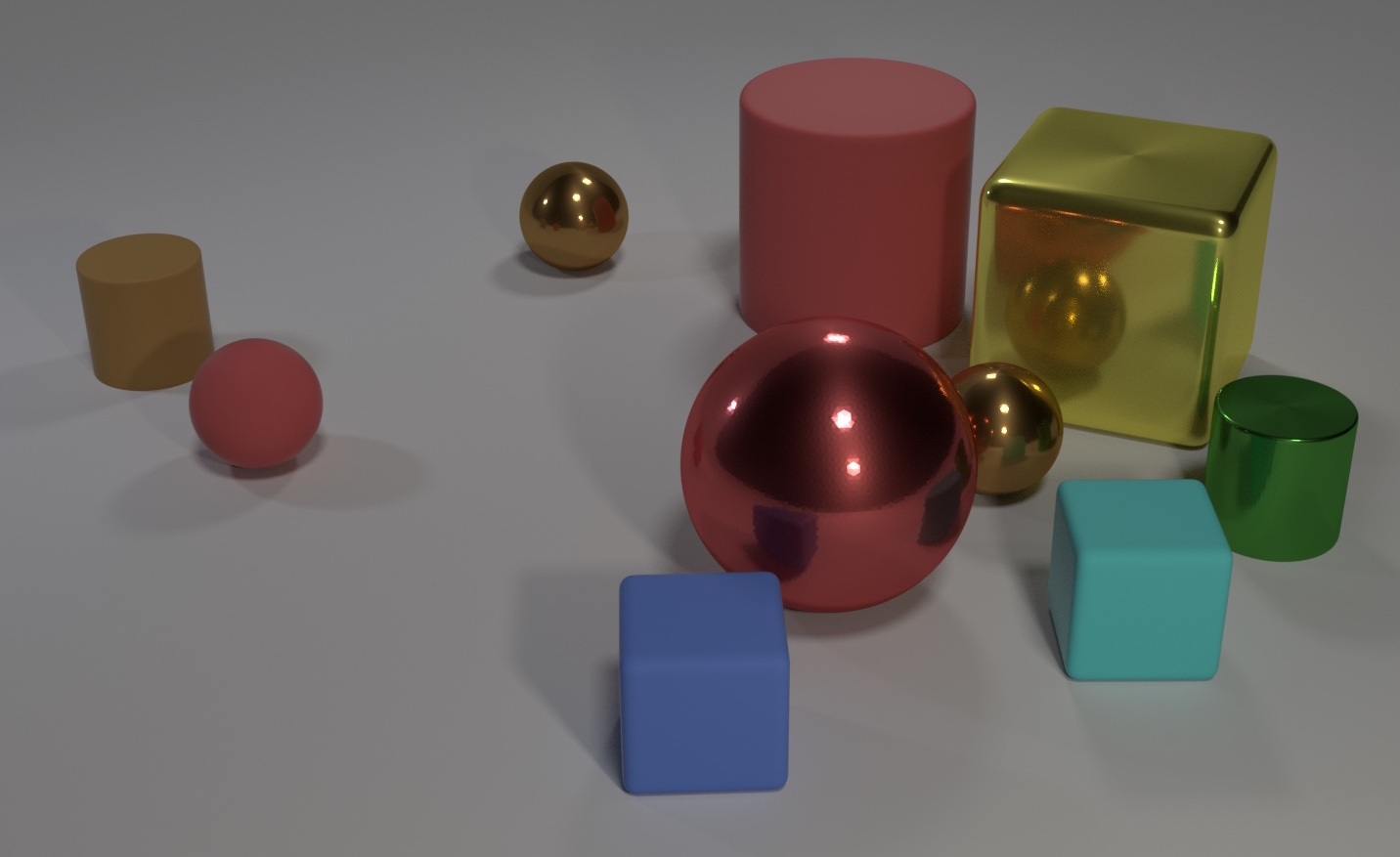

Relational Reasoning Networks

Reasoning about the relations between objects and their properties is a hallmark of intelligence. Here are some notes on relational reasoning neural networks.

Transformer Variants: A Peek

This is an introduction to Transformer variants.[1]

FLOPs Computation

FLOPs (floating-point operations) is a measure of a model's computational complexity in deep learning.