Background: Conventional maximum likelihood training of sequence generators with teacher forcing is inherently prone to exposure bias at inference time, because training and testing differ. At test time the generator produces a sequence iteratively, conditioning each step on its own previous predictions, and those predictions may never have been observed during training. The resulting mismatch accumulates as the generated sequence grows longer. In other words, the model is trained only on demonstrated behavior (real data samples), not in free-running mode.
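To make the training-testing discrepancy concrete, here is a minimal sketch with a hypothetical toy "model": a hand-written next-token table (`toy_model`) standing in for a trained generator, not any real network. Under teacher forcing every step sees the ground-truth prefix, so a single wrong prediction does not affect later steps. In free-running mode the model conditions on its own mistake, and the error propagates into the rest of the sequence.

```python
# Toy illustration of teacher forcing vs. free-running decoding.
# ASSUMPTION: `toy_model` is a hypothetical lookup table standing in for a
# learned argmax p(next | prev); it is deliberately wrong after the token "a".

def toy_model(prev_token):
    """Hypothetical stand-in for a trained generator: returns the argmax next token."""
    table = {"<s>": "a", "a": "c", "b": "c", "c": "</s>"}
    return table.get(prev_token, "</s>")


def teacher_forced_predictions(target):
    """Training-style decoding: every step is conditioned on the ground-truth prefix."""
    prefix = ["<s>"] + target[:-1]
    return [toy_model(tok) for tok in prefix]


def free_running(max_len=10):
    """Inference-style decoding: every step is conditioned on the model's own output."""
    out, prev = [], "<s>"
    for _ in range(max_len):
        prev = toy_model(prev)
        out.append(prev)
        if prev == "</s>":
            break
    return out


if __name__ == "__main__":
    target = ["a", "b", "c", "</s>"]
    print(teacher_forced_predictions(target))  # ['a', 'c', 'c', '</s>'] (one wrong step)
    print(free_running())                      # ['a', 'c', '</s>'] (error propagates, "b" and "c" are lost)
```

Under teacher forcing only the second position is wrong, because the third step still sees the true "b". When free running, the model's own "c" is fed back in, so it ends the sequence early and every later position diverges from the target.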

Generative Adversarial Networks (GANs) hold promise for mitigating such issues when generating discrete sequences, in tasks such as language modeling and speech or music generation.

Automatic Evaluation Metrics for Language Generation

A summary of automatic evaluation metrics for natural language generation (NLG) applications.

Human evaluation considers adequacy, fidelity, and fluency, but it is expensive.

- Adequacy: Does the output convey the same meaning as the input sentence? Is part of the message lost, added, or distorted?

- Fluency: Is the output good fluent English? This involves both grammatical correctness and idiomatic word choices.

A reliable automatic metric is therefore valuable across NLG applications such as machine translation, text summarization, image captioning, dialogue generation, and poetry or story generation.

Shell Command Notes

A summary of helpful bash commands.

Image Captioning: A Summary!

A summary of image-to-text translation.

An Introduction to Capsules

Decoding in Text Generation

A summary of common decoding strategies in language generation.

Sparse Matrix in Data Processing

Storing every zero element of a sparse matrix is wasteful, especially when the data grow incrementally. When constructing tf-idf or bag-of-words features, or saving a graph adjacency matrix, inefficient storage can lead to memory errors. To circumvent this problem, use an efficient sparse matrix storage format.
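As an illustration of what "efficient sparse matrix storage" means, the sketch below converts a small dense bag-of-words count matrix into compressed sparse row (CSR) form, one common format among several. It is a pure-Python teaching sketch; the function names `dense_to_csr` and `csr_row` are hypothetical, not from any library.

```python
from typing import List, Tuple


def dense_to_csr(dense: List[List[float]]) -> Tuple[List[float], List[int], List[int]]:
    """Convert a dense row-major matrix to CSR arrays (data, indices, indptr).

    data    - the non-zero values, row by row
    indices - the column index of each value in `data`
    indptr  - row i occupies data[indptr[i]:indptr[i + 1]]
    """
    data, indices, indptr = [], [], [0]
    for row in dense:
        for col, value in enumerate(row):
            if value != 0:  # zeros are simply not stored
                data.append(value)
                indices.append(col)
        indptr.append(len(data))
    return data, indices, indptr


def csr_row(data, indices, indptr, i, n_cols):
    """Rebuild row i as a dense list, visiting only its stored non-zeros."""
    row = [0] * n_cols
    for k in range(indptr[i], indptr[i + 1]):
        row[indices[k]] = data[k]
    return row


if __name__ == "__main__":
    # Rows are documents, columns are vocabulary terms (toy counts).
    counts = [[0, 2, 0, 0],
              [1, 0, 0, 3],
              [0, 0, 0, 0]]
    data, indices, indptr = dense_to_csr(counts)
    print(data, indices, indptr)                   # [2, 1, 3] [1, 0, 3] [0, 1, 3, 3]
    print(csr_row(data, indices, indptr, 1, 4))    # [1, 0, 0, 3]
```

Only the three non-zero counts are kept, plus one row pointer per row, so memory scales with the number of non-zeros instead of rows × columns. In practice `scipy.sparse.csr_matrix` exposes the same `data`, `indices`, and `indptr` attributes. For incremental construction, formats such as COO or LIL are usually more convenient, with a conversion to CSR afterwards.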

Clustering Methods: A Note

Notes on clustering approaches.

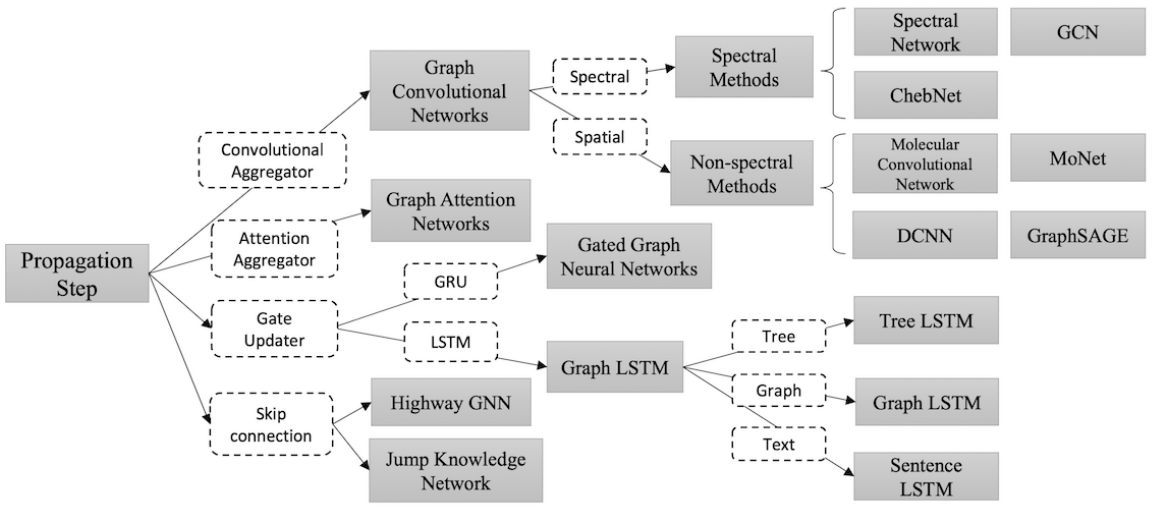

An Introduction to Graph Neural Networks

Graph Neural Networks (GNNs) have demonstrated efficacy on non-Euclidean data, such as social media and bioinformatics data.

Generative Adversarial Networks

GANs are widely used to estimate generative models without an explicit density function. Instead, they take a game-theoretic approach: a generator learns to sample from the training distribution through a two-player game against a discriminator.
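For reference, the two-player game in the original GAN formulation (Goodfellow et al., 2014) is usually written as the minimax objective below. Here $G$ is the generator, $D$ the discriminator (outputting the probability that its input is real), $p_{\text{data}}$ the data distribution, and $p_z$ the noise prior.

```latex
\min_{G} \max_{D} V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

For a fixed generator with induced distribution $p_g$, the inner maximization is solved by $D^{*}(x) = p_{\text{data}}(x) / \big(p_{\text{data}}(x) + p_g(x)\big)$, so the discriminator ends up scoring how much more likely a sample is under the data than under the generator.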