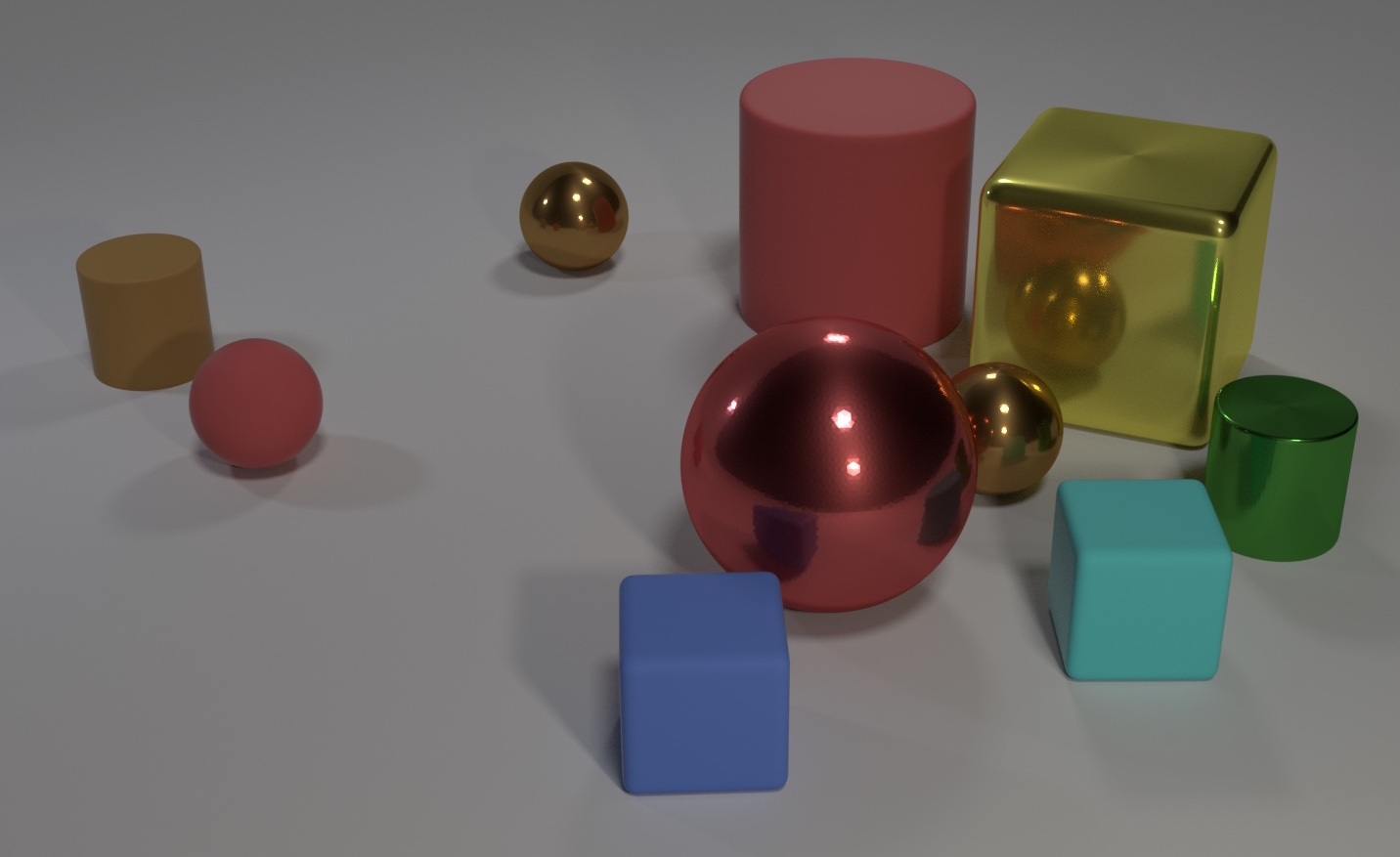

Reasoning about the relations between objects and their properties is a hallmark of intelligence. Here are some notes on neural networks for relational reasoning.

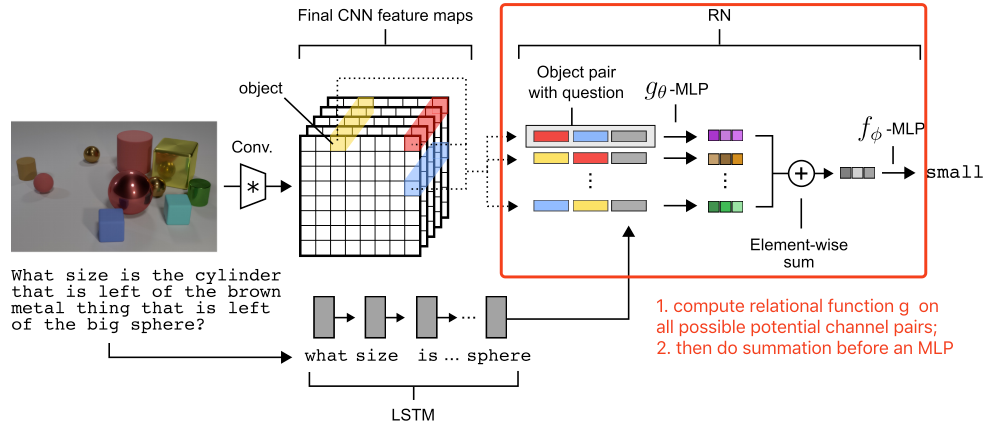

Relation Network

Relation Networks (RNs)

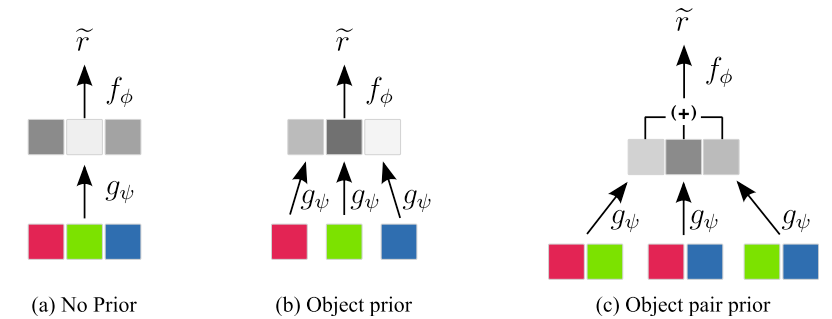

Relation Networks (RNs)[1] adopt the functional form of a neural network for relational reasoning. RNs consider the potential relations between all object pairs.

“RNs learn to infer the existence and implications of object relations.” (Santoro et al., 2017)[1]

$$\mathrm{RN}(O) = f_\phi\Big(\color{red}{\pmb{a}}\big(\{g_\theta(o_i, o_j)\}_{i,j}\big)\Big)$$

where $\color{red}{\pmb{a}}$ is the aggregation function.

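When we take it as summation, the simplest form is:

$$\mathrm{RN}(O) = f_\phi\Big(\sum_{i,j} g_\theta(o_i, o_j)\Big)$$

where

- the input is a set of objects $O = \{o_1, o_2, \dots, o_n\}$

- $f_\phi$ and $g_\theta$ are two MLPs with parameters $\phi$ and $\theta$. The same MLP $g_\theta$ operates on all possible pairs. $g_\theta$ captures the representation of pair-wise relations, and $f_\phi$ integrates information about all pairs.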

The summation in the RN equation makes the output invariant to the order (permutation) of the object set. Max or average pooling can be used instead.
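A minimal sketch of the summation form, to make the permutation invariance concrete. Everything here is an assumption for illustration: the helper names (`make_linear`, `dense_tanh`, `relation_network`), the toy sizes, and the use of a single random dense layer with tanh in place of each MLP. It is not the implementation from [1].

```python
import itertools
import math
import random

def make_linear(rng, n_in, n_out):
    """Random weight matrix and zero bias for one dense layer (illustrative only)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def dense_tanh(layer, x):
    """One dense layer followed by tanh; stands in for a full MLP."""
    weights, bias = layer
    return [math.tanh(sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, bias)]

def relation_network(objects, g_layer, f_layer):
    """f applied to the sum of g over all ordered object pairs."""
    hidden = len(g_layer[0])
    pooled = [0.0] * hidden
    for o_i, o_j in itertools.product(objects, repeat=2):
        relation = dense_tanh(g_layer, o_i + o_j)  # g sees the concatenated pair
        pooled = [p + r for p, r in zip(pooled, relation)]
    return dense_tanh(f_layer, pooled)

rng = random.Random(0)
obj_dim, hidden_dim, out_dim = 3, 4, 2
g_layer = make_linear(rng, 2 * obj_dim, hidden_dim)
f_layer = make_linear(rng, hidden_dim, out_dim)

objects = [[rng.uniform(-1, 1) for _ in range(obj_dim)] for _ in range(4)]
shuffled = objects[::-1]  # the same set of objects in a different order

out_a = relation_network(objects, g_layer, f_layer)
out_b = relation_network(shuffled, g_layer, f_layer)
# The difference should be zero up to floating-point rounding.
print(max(abs(a - b) for a, b in zip(out_a, out_b)))
```

Swapping the running sum for an element-wise max or mean over the pair outputs would keep the same invariance, since those aggregators also ignore ordering.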

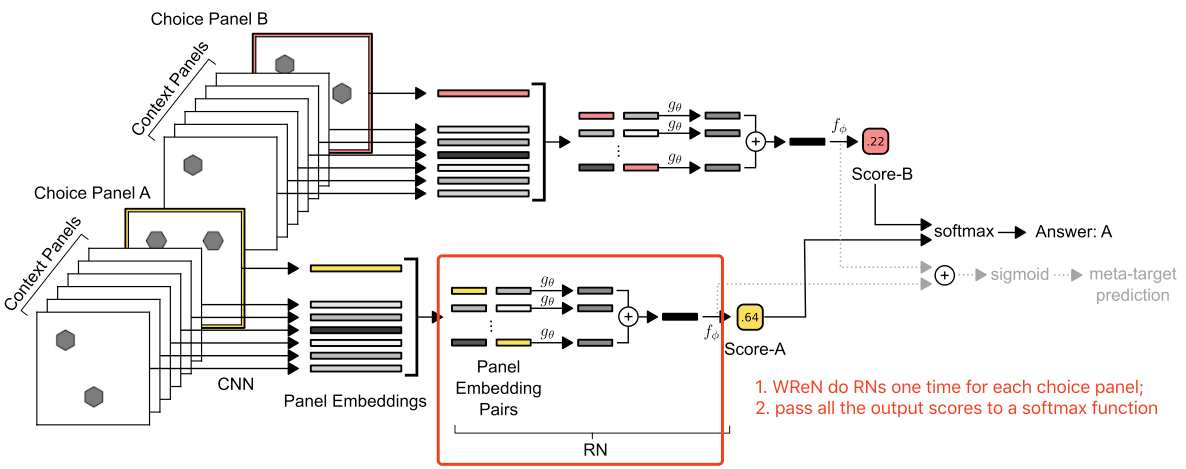

Wild Relation Network (WReN)

Wild Relation Network (WReN)[3] applies the RN module multiple times, once per candidate answer, to infer the inter-panel relationships. Afterward, all scores are passed to a softmax function.
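The scoring loop can be sketched as follows. This is only a toy under stated assumptions: `toy_rn_score` is a hypothetical stand-in for a trained RN, the panel embeddings are made-up numbers, and the real model in [3] embeds panels with a CNN first.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def toy_rn_score(panels):
    """Hypothetical stand-in for a trained RN: sums a pairwise term over all panel pairs."""
    return sum(math.tanh(sum(a * b for a, b in zip(p, q)))
               for p in panels for q in panels)

def wren_answer_distribution(context_panels, candidate_panels):
    """Run the RN once per candidate (context plus that candidate), then softmax the scores."""
    scores = [toy_rn_score(context_panels + [cand]) for cand in candidate_panels]
    return softmax(scores)

context = [[0.2, 0.1], [0.4, 0.3], [0.1, 0.5]]       # toy context panel embeddings
candidates = [[0.3, 0.3], [-0.5, -0.4], [0.9, 0.8]]  # toy candidate answer embeddings
probs = wren_answer_distribution(context, candidates)
print(probs, probs.index(max(probs)))
```

The design point is that every candidate is scored by the same RN against the same context, so the softmax compares like with like.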

Visual Interaction Network (VIN)

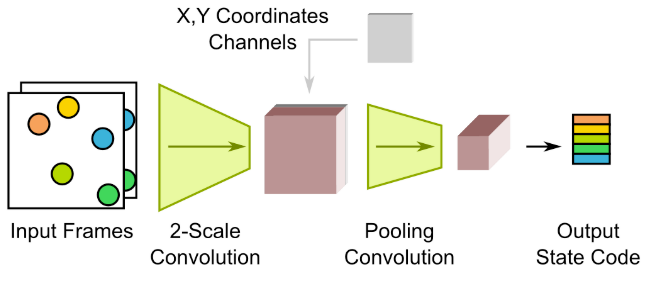

Visual Interaction Network (VIN) uses ConvNets to encode images. Two consecutive input frames are convolved into a state code.

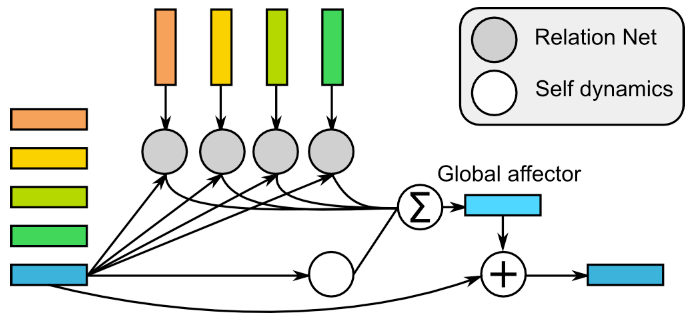

Afterward, an RN is employed in its Interaction Net (IN):

- For each slot, RN is applied to the slot’s concatenation with each other slot.

- Then a self-dynamics net is applied to the slot itself.

- Finally, all the results are summed to produce the output.
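The three steps above can be sketched as a loop over slots. The two inner functions are hypothetical stand-ins for the learned relation and self-dynamics networks, and the slot values are toy numbers; only the control flow is meant to mirror the description.

```python
import math

def relation_net(pair):
    """Hypothetical stand-in for the shared relation MLP on a concatenated slot pair."""
    half = len(pair) // 2
    slot, other = pair[:half], pair[half:]
    return [math.tanh(o - s) for s, o in zip(slot, other)]

def self_dynamics_net(slot):
    """Hypothetical stand-in for the self-dynamics MLP on a single slot."""
    return [0.5 * s for s in slot]

def interaction_step(slots):
    """For each slot: self-dynamics term plus the summed effects from every other slot."""
    outputs = []
    for i, slot in enumerate(slots):
        total = self_dynamics_net(slot)
        for j, other in enumerate(slots):
            if i == j:
                continue  # only pair the slot with *other* slots
            effect = relation_net(slot + other)  # list concatenation of the pair
            total = [t + e for t, e in zip(total, effect)]
        outputs.append(total)
    return outputs

slots = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]  # toy state codes, one row per slot
updated = interaction_step(slots)
print(updated)
```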

Relational Memory Core (RMC)

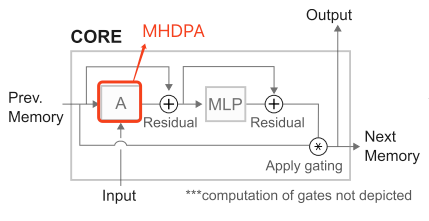

Relational Memory Core (RMC)[4] combines LSTMs with non-local networks (i.e., Transformer-style self-attention).

- Encoding new memories

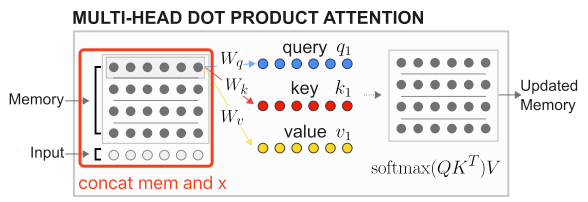

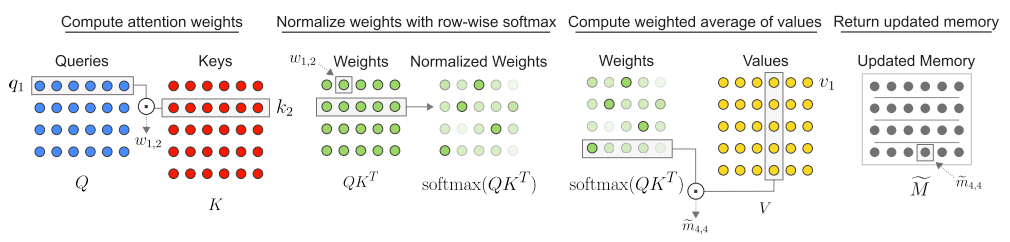

Let matrix $M$ denote the stored memories, with row-wise memories $m_i$. RMC applies multi-head dot product attention (MHDPA) to allow memories to interact with each other. $\color{green}{[M;x]}$ includes both the memories and the new observations. The output $\tilde{M}$ has the same size as $M$.
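A single-head sketch of this attention step, to show why the output keeps the shape of $M$: queries come only from $M$, while keys and values come from $[M;x]$. The identity projections and toy values are assumptions for illustration; MHDPA runs several such heads with learned projections and concatenates their outputs.

```python
import math

def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend_over_memory(memory, inputs, w_q, w_k, w_v):
    """One attention head: queries from M, keys and values from [M; x]."""
    mem_and_inputs = memory + inputs  # row-wise concatenation [M; x]
    queries = matmul(memory, w_q)
    keys = matmul(mem_and_inputs, w_k)
    values = matmul(mem_and_inputs, w_v)
    scale = math.sqrt(len(keys[0]))
    weights = [softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
               for q in queries]
    return matmul(weights, values), weights

identity = [[1.0, 0.0], [0.0, 1.0]]            # toy projections, not learned
memory = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # M: three memory slots
inputs = [[2.0, 2.0]]                          # x: one new observation
new_memory, weights = attend_over_memory(memory, inputs, identity, identity, identity)
print(len(new_memory), len(new_memory[0]))     # rows and columns match M
```

Each row of `weights` has one entry per row of $[M;x]$, so every memory slot can read from all other slots and from the new input in one step.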

- Introducing recurrence with an LSTM variant

where $g_\psi$ is a row/memory-wise MLP with layer normalization.[4]

Recurrent Memory, Attention and Composition (MAC)

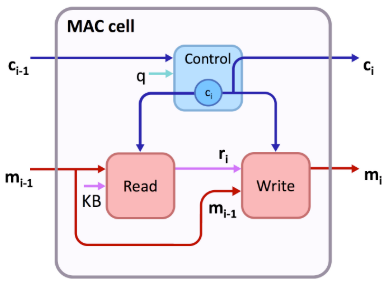

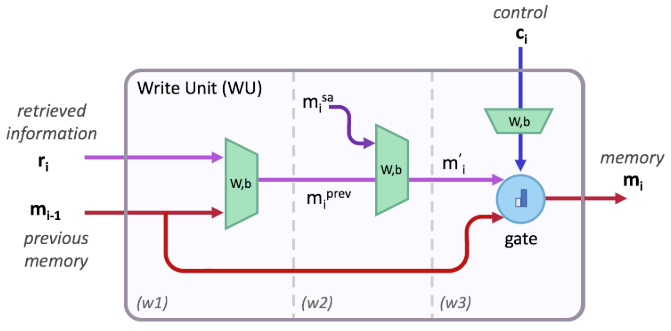

The MAC recurrent cell[5] consists of a control unit, a read unit and a write unit.

- control unit: attends to different parts of the task question

- read unit: extracts information out of the knowledge base (the image in a VQA task)

- write unit: integrates the retrieved information into the memory state

Input:

- concatenate the final states of a bi-LSTM run over the textual question to form $\pmb{q}$

- convolve the image to form the knowledge base

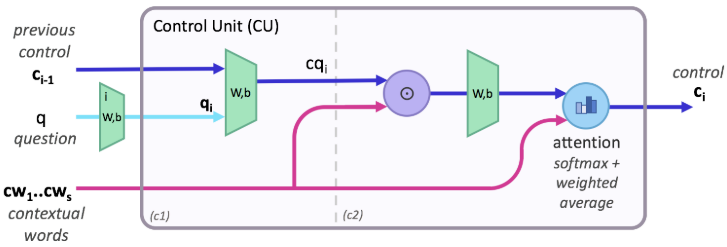

Control unit

Given the contextual question words $cw_1, \dots, cw_S$, the question representation $q_i$, and the previous control state $c_{i-1}$:

- Concatenate $q_i$ and $c_{i-1}$ and feed them into a FFNN to get $cq_i$.

- Measure the similarity between $cq_i$ and each question word $cw_s$; then use a softmax layer to normalize the weights, acquiring an attention distribution.

- Take the weighted average of the question context words to get the current control state $c_i$.
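The control-unit steps can be sketched as below. The weight names (`w_cq`, `b_cq`, `w_att`), the random initialisation and the toy dimension are assumptions for illustration, and the single affine layer stands in for the FFNN; this follows the three steps in the notes rather than reproducing the code of [5].

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def linear(weights, bias, x):
    """Affine map W x + b with W given as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

def control_unit(c_prev, q, context_words, w_cq, b_cq, w_att):
    # Step 1: feed the concatenation of q and the previous control state through a dense layer.
    cq = linear(w_cq, b_cq, q + c_prev)
    # Step 2: score each context word by a weighted element-wise product with cq, then softmax.
    logits = [sum(w * a * b for w, a, b in zip(w_att, cq, cw))
              for cw in context_words]
    attention = softmax(logits)
    # Step 3: the new control state is the attention-weighted average of the context words.
    c_new = [sum(a * cw[k] for a, cw in zip(attention, context_words))
             for k in range(len(c_prev))]
    return c_new, attention

rng = random.Random(0)
d = 3  # toy hidden size
w_cq = [[rng.uniform(-1, 1) for _ in range(2 * d)] for _ in range(d)]
b_cq = [0.0] * d
w_att = [rng.uniform(-1, 1) for _ in range(d)]
context_words = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(5)]
q = [rng.uniform(-1, 1) for _ in range(d)]
c_prev = [0.0] * d

c_new, attention = control_unit(c_prev, q, context_words, w_cq, b_cq, w_att)
print(attention, c_new)
```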

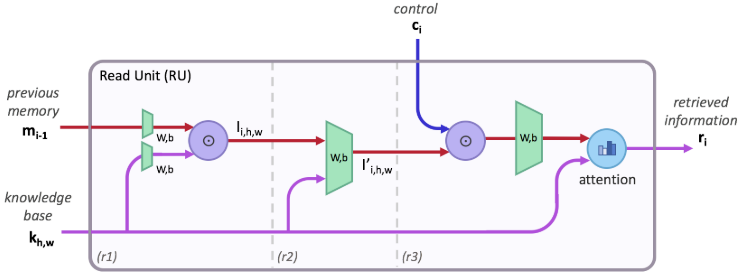

Read unit

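- Interact between each knowledge-base element $k_{h,w}$ and the previous memory $m_{i-1}$ to get $I_{i,h,w}$

- Concatenate $I_{i,h,w}$ and $k_{h,w}$ and feed them into a dense layer

- Compute an attention distribution over the knowledge base, guided by the current control state $c_i$, and finally take the weighted average to get the retrieved information $r_i$.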
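A sketch of the read unit along the same lines. The weight names (`w_mem`, `w_know`, `w_merge`, `w_att`), the bias-free projections, and the flattened four-cell knowledge base are assumptions for illustration; the attention is conditioned on the control state, as in [5], but this is not the authors' code.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def linear(weights, x):
    """Bias-free linear map W x with W given as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def read_unit(memory, control, knowledge, w_mem, w_know, w_merge, w_att):
    mem_proj = linear(w_mem, memory)
    logits = []
    for k in knowledge:
        # Step 1: element-wise interaction between projected memory and knowledge element.
        interaction = [m * p for m, p in zip(mem_proj, linear(w_know, k))]
        # Step 2: concatenate the interaction with the element and apply a dense layer.
        merged = linear(w_merge, interaction + k)
        # Step 3a: score the merged vector against the control state.
        logits.append(sum(w * c * v for w, c, v in zip(w_att, control, merged)))
    attention = softmax(logits)
    # Step 3b: retrieved information is the attention-weighted average of the elements.
    dim = len(knowledge[0])
    retrieved = [sum(a * k[j] for a, k in zip(attention, knowledge)) for j in range(dim)]
    return retrieved, attention

rng = random.Random(1)
d = 3  # toy hidden size

def rand_matrix(rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

w_mem, w_know, w_merge = rand_matrix(d, d), rand_matrix(d, d), rand_matrix(d, 2 * d)
w_att = [rng.uniform(-1, 1) for _ in range(d)]
knowledge = rand_matrix(4, d)  # four flattened image regions
memory = [rng.uniform(-1, 1) for _ in range(d)]
control = [rng.uniform(-1, 1) for _ in range(d)]

retrieved, read_attention = read_unit(memory, control, knowledge,
                                      w_mem, w_know, w_merge, w_att)
print(read_attention, retrieved)
```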

Write unit

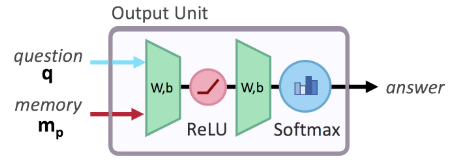

Output unit

Concatenate $q$ and the final memory state $m_p$, then pass them through a 2-layer fully connected network followed by a softmax function.

References

- 1. Santoro, A., Raposo, D., Barrett, D. G., Malinowski, M., Pascanu, R., Battaglia, P., & Lillicrap, T. (2017). A simple neural network module for relational reasoning. In Advances in Neural Information Processing Systems (pp. 4967-4976).

- 2. Raposo, D., Santoro, A., Barrett, D., Pascanu, R., Lillicrap, T., & Battaglia, P. (2017). Discovering objects and their relations from entangled scene representations. arXiv preprint arXiv:1702.05068.

- 3. Barrett, D. G., Hill, F., Santoro, A., Morcos, A. S., & Lillicrap, T. (2018). Measuring abstract reasoning in neural networks. arXiv preprint arXiv:1807.04225.

- 4. Santoro, A., Faulkner, R., Raposo, D., Rae, J., Chrzanowski, M., Weber, T., ... & Lillicrap, T. (2018). Relational recurrent neural networks. In Advances in Neural Information Processing Systems (pp. 7299-7310).

- 5. Hudson, D. A., & Manning, C. D. (2018). Compositional attention networks for machine reasoning. arXiv preprint arXiv:1803.03067.