An introduction of Part-of-Speech tagging using Hidden Markov Model (HMMs).

Markov chains

Consider a sequence of state variables . A first-order Markov model instantiate two simplifying assumptions.

- The probability of a state depends only the previous state.

- The probability of an output observation depends only on the current state that produced the observation :

Hidden Markov Model

- A set of $N$ states:

- A transition probability matrix , each representing the probability of moving from state $i$ to state $j$, s.t. :

- A sequence of $T$ observations, each one drawn from a vocabulary :

- A sequence of observation likelihoods, a.k.a. emission probabilities, each expressing the probability of an observation , being generated from a state $i$:

- An initial probability distribution over states. is the prob. that the Markov chain will start in state $i$. Some state $j$ may have , meaning that they cannot be initial states. Also, :

Training with MLE

The initial probability $\pi$:

The transition probability matrix $A$ contains :

where means the count of the first word’s pos tag in bigram tuples.

The emission probability matrix $B$ contains :

Handling OOV problems

Decoding

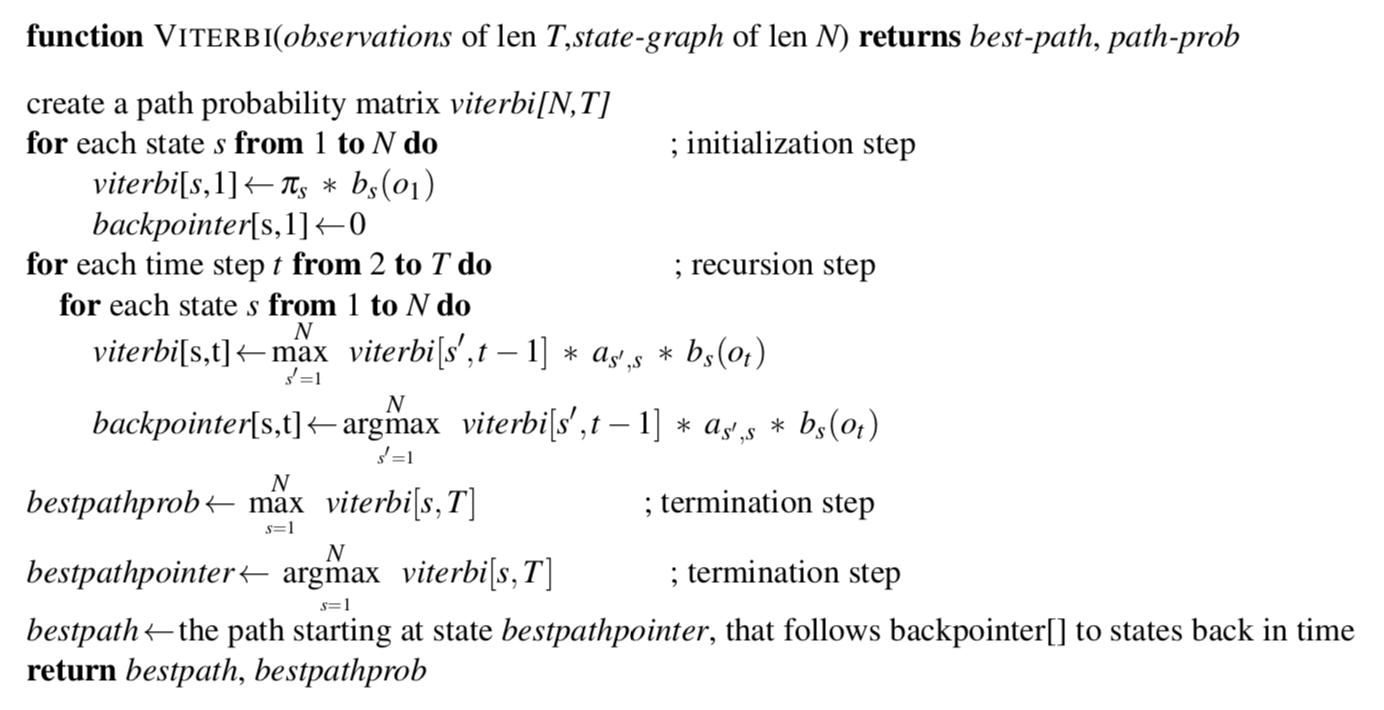

Given as input an HMM $\lambda = (A, B)$ and a sequence of observations , find the most probable sequence of states

Viterbi algorithm