Notes of lectures by D. Silver. A brief introduction of RL.

Introduction to Reinforcement Learning

Characteristics v.s. ML

- No supervisor, only a reward signal

- Feedback is delayed, not instantaneous

- Time really matters (sequential, non i.i.d data)

- Agent’s action affect the subsequent data it receives

RL problem

Rewards

- A reward is a scalar feedback signal

- Indicates how well agent is doing at step $t$

- The agent’s job is to maximize cumulative reward

RL is based on reward hypothesis, i.e. All goals can be described by the maximization of expected cumulative reward.

Sequential Decision making

- Goal: select actions to maximize total future reward

- Actions may have long term consequences

- Reward may be delayed

- It may be better to sacrifice immediate reward to gain more long-term reward

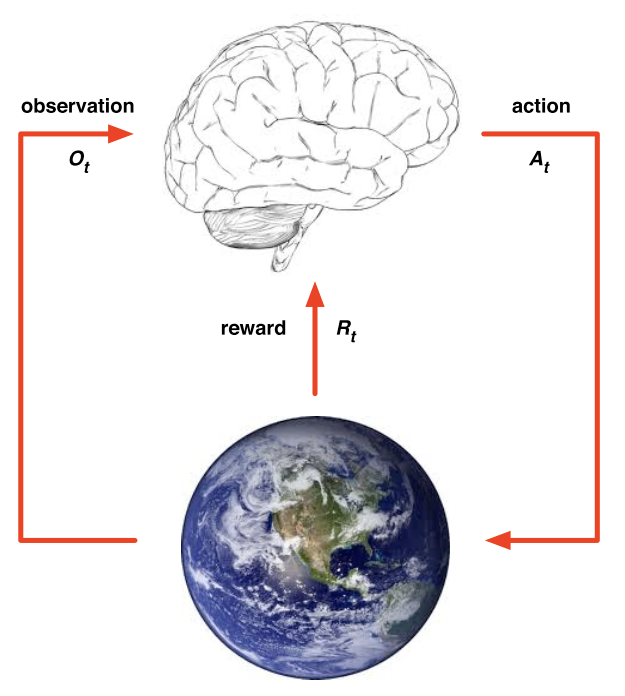

Environments

- At each time step $t$ the agent:

- executed action

- Receives observations

- Receives scalar reward

- The environment:

- receives action

- emits observation

- emits scalar reward

- $t$ increments at env. step

State

The history is the sequence of observations, actions, rewards

- i.e. all observable variables up to time $t$

- i.e. the sensorimotor stream of a robot or embodied agent

- What happens depends on the history:

- The agent selects actions

- The environment selects observations / rewards

- State is the information used to determine what happens next. Formally, state is a function of the history:

Environment state

The environment state is the environment’s private representation, i.e. whatever data the environment uses to pick the next observation / reward.

- The environment state is not usually visible to the agent. Even if is visible, it may contain irrelevant information.

Agent state

The agent state is the agent’s internal representation.

- i.e. whatever information the agent uses to pick the next action.

- i.e. it is the information used by RL algorithms.

- It can be any function of history

Information state

An information state (a.k.a.Markov state) contains all useful information from the history.

Definition: A state is Markov if and only if

- “The future is independent of the past given the present”

- Once the state is known, the history may be thrown away, i.e. the state is a sufficient statistic of the future

- The environment state is Markov

- The history is Markov

Fully observable environment

Fully observability: agent directly observes environment state;

- Agent state = environment state = information state

- Formally, this is a Markov decision process (MDP)

Partially observable environments

Partially observability: agent indirectly observes environment. e.g.:

- A robot with camera vision is not told its absolute location.

- A trading agent only observes current prices.

Not agent state $\neq$ environment state.

- Formally, this is a partially observable Markov decision process (POMDP).

- Agent must construct its own state representation , e.g.

- Complete history

- Beliefs of environment state:

- Recurrent neural net:

RL agent

Major component

- Policy: agent’s behavior function

- Value function: how good is each state and/or action

- Model: agent’s representation of the environment

Policy

- A policy is the agent’s behavior

- it is a map from state to action, e.g.

- Deterministic policy:

- Stochastic policy:

Value function

- Value function is a prediction of future reward

- Used to evaluate the goodness / badness of states

- Therefore to select between actions, e.g.

Model

A model predicts what the environment will do next

- $\mathcal{P}$ predicts the next state

- $\mathcal{R}$ predicts the next (immediate) reward, e.g.

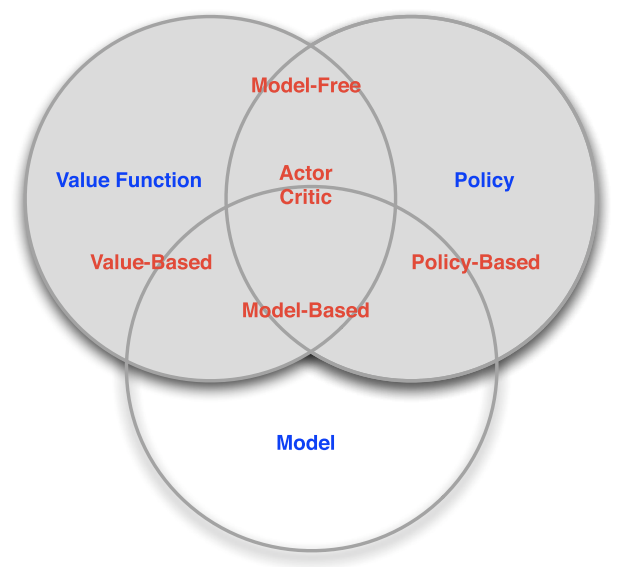

RL agent category 1

- Value based

- No policy (implicit)

- Value function

- Policy based

- Policy

- No value function

- Actor Critic

- Policy

- Value function

RL agent category 2

- Model Free

- Policy and/or Value Function

- No Model

- Model based

- Policy and/or Value Function

- Model

Problems within RL

Leaning and Planning

Two fundamental problems in sequential decision making

- Reinforcement learning

- The environment is initially unknown

- The agent interacts with the environment

- The agent improves its policy

- Planning

- A model of the environment is known

- The agent performs computations with its model (without any external interaction)

- The agents improves its policy

- a.k.a. deliberation, reasoning, introspection, pondering, thought, search

Exploration and Exploitation

- RL is like trial-and-error learning

- The agent should discover a good policy

- From its experiences of the environment

Without losing too much reward along the way

Exploration finds more information about the environment; exploitation exploits known information to maximize reward

- It is usually important to explore as well as exploit.